Detecting iPhone Touch Screen Gesture Motions

| Previous | Table of Contents | Next |

| An Example iPhone Touch, Multitouch and Tap Application | Drawing iPhone 2D Graphics with Quartz |


The last area of touch screen event handling that we will look at in this book is the detection of gestures that involve movement. As covered in a previous chapter, a gesture refers to the activity that takes place between a finger touching the screen and that finger being lifted from the screen. In the chapter entitled An Example iPhone Touch, Multitouch and Tap Application we dealt with touches that did not involve any movement across the screen surface. We will now create an example that tracks the coordinates of a finger as it moves across the screen.

The assumption is made throughout this chapter that the reader has already reviewed the Overview of iPhone Multitouch, Taps and Gestures chapter of this book.


The Example iPhone Gesture Application

This example application will detect when a single touch is made on the screen of the iPhone and then report the coordinates of that finger as it is moved across the screen surface.

Creating the Example Project

Start Xcode and create a new project using the View-based Application template, naming the project touchMotion.

Creating Outlets

The application will display the X and Y coordinates of the touch and update these values in real time as the finger moves across the screen. When the finger is lifted from the screen, the start and end coordinates of the gesture will be displayed. This requires two outlets that will later be connected to labels in the user interface of the application. In addition, we need an instance variable in our view controller in which to store the start position of the touch. To create these outlets and the instance variable, select the touchMotionViewController.h file and modify it as follows:

```objc
#import <UIKit/UIKit.h>

@interface touchMotionViewController : UIViewController {
    UILabel *xCoord;
    UILabel *yCoord;
    CGPoint startPoint;
}

@property (retain, nonatomic) IBOutlet UILabel *xCoord;
@property (retain, nonatomic) IBOutlet UILabel *yCoord;
@property CGPoint startPoint;

@end
```

Next, edit the touchMotionViewController.m file and add the @synthesize directive:

```objc
#import "touchMotionViewController.h"

@implementation touchMotionViewController

@synthesize xCoord, yCoord, startPoint;
.
.
.
@end
```

Once the code changes have been made, be sure to save both files before proceeding to the user interface design phase.

Designing the Application User Interface

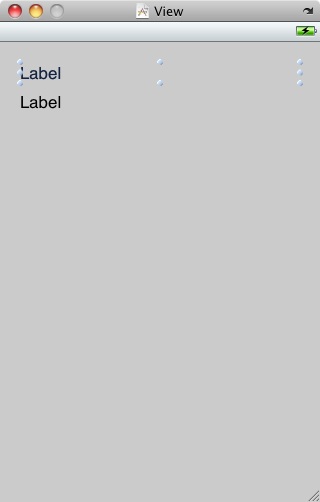

Double click on the touchMotionViewController.xib file to load the NIB file into Interface Builder. Within Interface Builder, create a user interface such that it resembles the layout in the following figure:

Be sure to stretch the labels so that both cover most of the width of the view. Once the label objects have been added to the view, connect the xCoord outlet to the top label by Ctrl-clicking on the File’s Owner icon and dragging the blue line to the top label. Release the mouse button and select xCoord from the resulting menu. Repeat this step to connect the yCoord outlet to the bottom label, then save the design before exiting Interface Builder.

Implementing the touchesBegan Method

When the user first touches the screen, the location coordinates need to be saved in the startPoint instance variable and reported to the user. This can be achieved by adding the following method to the touchMotionViewController.m file:

```objc
- (void) touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    // Only a single finger is being tracked, so any touch in the set will do
    UITouch *theTouch = [touches anyObject];

    // Remember where the gesture started so touchesEnded can report it later
    startPoint = [theTouch locationInView:self.view];

    CGFloat x = startPoint.x;
    CGFloat y = startPoint.y;

    xCoord.text = [NSString stringWithFormat:@"x = %f", x];
    yCoord.text = [NSString stringWithFormat:@"y = %f", y];
}
```
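The `%f` conversion used with `stringWithFormat:` follows the printf conventions, so each label shows six digits after the decimal point (for example `x = 160.500000`). If that is more precision than is useful, an explicit precision such as `%.1f` can be used instead. Because Objective-C is a superset of C, the minimal sketch below uses plain C `snprintf` to show the effect of the precision; the helper name `format_coordinate` is hypothetical and not part of the example project.

```c
#include <stdio.h>

/* Hypothetical helper: writes "<axis> = <value>" into buf, mirroring the
   label text built with stringWithFormat: in touchesBegan. decimals sets
   the number of digits after the decimal point; the plain "%f" used in
   the chapter behaves like a precision of 6. Returns the length that
   snprintf reports for the formatted text. */
int format_coordinate(char *buf, size_t len, const char *axis,
                      double value, int decimals)
{
    if (decimals < 0) {
        decimals = 0;   /* a negative precision would be treated as omitted */
    }
    /* "%.*f" reads the precision from the int argument before the value. */
    return snprintf(buf, len, "%s = %.*f", axis, decimals, value);
}
```

In the Objective-C method itself, the equivalent change is simply to write `@"x = %.1f"` in place of `@"x = %f"`.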

Implementing the touchesMoved Method

As the user’s finger moves across the screen, the touchesMoved method is called repeatedly for as long as the motion continues. By implementing the touchesMoved method in our view controller, we can track the motion and display the updated coordinates to the user:

```objc
- (void) touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    UITouch *theTouch = [touches anyObject];

    // Current position of the finger within the view
    CGPoint touchLocation = [theTouch locationInView:self.view];

    CGFloat x = touchLocation.x;
    CGFloat y = touchLocation.y;

    xCoord.text = [NSString stringWithFormat:@"x = %f", x];
    yCoord.text = [NSString stringWithFormat:@"y = %f", y];
}
```
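Because startPoint is saved in touchesBegan, touchesMoved can also report how far the finger has travelled since the gesture began rather than only its current position. The sketch below shows the arithmetic in plain C (which is also valid Objective-C); the `TouchPoint` struct and `touch_translation` function are hypothetical stand-ins, with `TouchPoint` mimicking `CGPoint` so that the code compiles without the Core Graphics framework. Note that in UIKit the y axis increases downwards.

```c
/* Hypothetical stand-in for CGPoint: two floating-point coordinates. */
typedef struct {
    double x;
    double y;
} TouchPoint;

/* Offset of the current touch location from the point where the gesture
   began. In UIKit's coordinate system y grows downwards, so a positive
   y component means the finger has moved towards the bottom of the
   screen. */
TouchPoint touch_translation(TouchPoint start, TouchPoint current)
{
    TouchPoint delta = { current.x - start.x, current.y - start.y };
    return delta;
}
```

Inside touchesMoved, the same calculation would be `touchLocation.x - startPoint.x` and `touchLocation.y - startPoint.y`.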

Implementing the touchesEnded Method

When the user’s finger is lifted from the screen, the touchesEnded method is called. The final task, therefore, is to implement this method in our view controller so that it displays the start and end points of the gesture:

```objc
- (void) touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    UITouch *theTouch = [touches anyObject];

    // The location at which the finger left the screen ends the gesture
    CGPoint endPoint = [theTouch locationInView:self.view];

    xCoord.text = [NSString stringWithFormat:@"start = %f, %f", startPoint.x, startPoint.y];
    yCoord.text = [NSString stringWithFormat:@"end = %f, %f", endPoint.x, endPoint.y];
}
```
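With both startPoint and endPoint available in touchesEnded, a simple direction test becomes possible. The following sketch, written in plain C so that it can be placed in the Objective-C file, classifies the movement as a horizontal or vertical swipe by comparing the absolute x and y offsets, and ignores movements shorter than a minimum distance. The type names, function names and the idea of a distance threshold are illustrative assumptions, not part of UIKit or of the example project.

```c
/* Hypothetical stand-in for CGPoint. */
typedef struct {
    double x;
    double y;
} TouchPoint;

typedef enum {
    SwipeNone,        /* movement too short to count as a swipe */
    SwipeHorizontal,
    SwipeVertical
} SwipeKind;

/* Squared straight-line distance between the start and end of the gesture. */
double gesture_distance_squared(TouchPoint start, TouchPoint end)
{
    double dx = end.x - start.x;
    double dy = end.y - start.y;
    return dx * dx + dy * dy;
}

/* Classify a completed gesture by its dominant axis of movement.
   minDistance is an illustrative threshold in points. */
SwipeKind classify_swipe(TouchPoint start, TouchPoint end, double minDistance)
{
    double dx = end.x - start.x;
    double dy = end.y - start.y;

    /* Absolute offsets along each axis */
    if (dx < 0) dx = -dx;
    if (dy < 0) dy = -dy;

    /* Compare squared values to avoid calling sqrt() */
    if (gesture_distance_squared(start, end) < minDistance * minDistance) {
        return SwipeNone;
    }
    return (dx >= dy) ? SwipeHorizontal : SwipeVertical;
}
```

Comparing the squared distance with the squared threshold avoids a square-root call; if the actual distance is needed for display, `sqrt()` from `<math.h>` can be applied to the squared value.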

Building and Running the Gesture Example

Build and run the application using the Build and Run button located in the toolbar of the main Xcode project window. When the application starts (either in the iPhone Simulator or on a physical device), touch the screen and drag to a new location before lifting your finger from the screen (or releasing the mouse button in the case of the Simulator). During the motion, the current coordinates will update in real time. Once the gesture is complete, the start and end locations of the movement will be displayed.

Summary

Simply by implementing the standard touch event methods, an iPhone application can easily track the motion of a gesture. The same concepts can also be used to track the motion of multiple touches and, with some simple mathematics, to detect, for example, whether a gesture is a vertical or horizontal swipe, a pinch or a stretch.
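As an illustration of the pinch and stretch case, one common technique (sketched here under assumptions, not taken from this book) is to compare the distance between two touches when the gesture starts with the distance between them now: a shrinking distance suggests a pinch, a growing one a stretch. In a real view, multipleTouchEnabled would need to be set so that both touches are delivered. The names below, and the use of a fractional tolerance, are hypothetical; the code is plain C and therefore also valid Objective-C.

```c
/* Hypothetical stand-in for CGPoint. */
typedef struct {
    double x;
    double y;
} TouchPoint;

typedef enum {
    PinchUnchanged,   /* separation changed by less than the tolerance */
    PinchIn,          /* fingers moved closer together (pinch) */
    PinchOut          /* fingers moved apart (stretch) */
} PinchKind;

/* Squared distance between two touches. */
static double separation_squared(TouchPoint a, TouchPoint b)
{
    double dx = b.x - a.x;
    double dy = b.y - a.y;
    return dx * dx + dy * dy;
}

/* Compare the finger separation at the start of the gesture with the
   current separation. tolerance is a fraction of the starting separation
   (for example 0.1 for 10%) and must be between 0 and 1. Because both
   sides are squared, the tolerance factors are squared as well, so no
   square root is needed. */
PinchKind classify_pinch(TouchPoint startA, TouchPoint startB,
                         TouchPoint nowA, TouchPoint nowB,
                         double tolerance)
{
    double before = separation_squared(startA, startB);
    double after = separation_squared(nowA, nowB);
    double grow = (1.0 + tolerance) * (1.0 + tolerance);
    double shrink = (1.0 - tolerance) * (1.0 - tolerance);

    if (after > before * grow) {
        return PinchOut;
    }
    if (after < before * shrink) {
        return PinchIn;
    }
    return PinchUnchanged;
}
```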
