Android Gesture and Pinch Recognition on the Kindle Fire


The term “gesture” is used to define a single interaction between the touch screen and the user. A gesture begins at the point that the screen is touched and ends when the last finger or pointing device leaves the display surface. When correctly harnessed, gestures can be implemented as a form of communication between user and application. Swiping motions to turn the pages of an eBook, or a pinching movement involving two touches to zoom in or out of an image are prime examples of the ways in which gestures can be used by the user to interact with an application.

The Android SDK allows custom gestures to be defined by the application developer and used to trigger events when performed by the user. This is a multistage process, the details of which are the topic of this chapter.

The Android Gestures Builder Application

The Android SDK allows developers to design custom gestures that are then stored in a gesture file bundled with an application. Gestures are most easily designed using the Gestures Builder application bundled with the Android Virtual Device (AVD) emulator environment. The creation of a gestures file involves running an AVD session that includes SD card support, locating and launching the Gestures Builder application and then “drawing” the gestures that will need to be detected by the application. Once the gestures have been designed, the file containing the gesture data can be pulled off the virtual SD card and added to the application project. Within the application code, the file is then loaded into a GestureLibrary instance where it can be used to search for matches to gestures performed by the user.

The GestureOverlayView Class

In order to facilitate the detection of gestures within an application, the Android SDK provides the GestureOverlayView class. This is a transparent view that can be placed over other views in the user interface for the sole purpose of detecting gestures.

Detecting Gestures

Gestures are detected by loading the gestures file created using Gestures Builder and then registering an OnGesturePerformedListener event listener on an instance of GestureOverlayView. The enclosing class is declared to implement the OnGesturePerformedListener interface and must therefore implement the onGesturePerformed callback method required by that interface. When the listener detects a gesture, the runtime system calls the onGesturePerformed callback method.

Identifying Specific Gestures

When a gesture is detected, the onGesturePerformed callback method is called and passed as arguments a reference to the GestureOverlayView object on which the gesture was detected, together with a Gesture object containing information about the gesture that was detected.

With access to the Gesture object, the GestureLibrary can then be used to compare the detected gesture to those contained in the gestures file previously loaded into the application. The GestureLibrary reports the probability that the current gesture matches an entry in the gestures file by calculating a prediction score for each gesture. A prediction score of 1.0 or greater is generally accepted to be a good match for the gesture.
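The selection rule described above can be sketched in plain Java, independent of the Android classes. This is only an illustration: ScoredGesture is a hypothetical stand-in for a recognizer result, the sample names and scores are made up, and the MIN_SCORE cut-off simply encodes the "1.0 or greater" rule of thumb.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for one recognizer result: a gesture name plus a score.
final class ScoredGesture {
    final String name;
    final double score;

    ScoredGesture(String name, double score) {
        this.name = name;
        this.score = score;
    }
}

final class BestMatch {
    // Assumed cut-off, taken from the "1.0 or greater" rule of thumb.
    static final double MIN_SCORE = 1.0;

    // Returns the name of the top-ranked entry if it reaches the threshold,
    // or null when the list is empty or the best score is too low.
    // The list is assumed to be ordered with the best match first.
    static String bestMatch(List<ScoredGesture> ranked) {
        if (ranked.isEmpty()) {
            return null;
        }
        ScoredGesture top = ranked.get(0);
        return top.score >= MIN_SCORE ? top.name : null;
    }

    public static void main(String[] args) {
        // Made-up sample data for illustration only
        List<ScoredGesture> ranked = new ArrayList<ScoredGesture>();
        ranked.add(new ScoredGesture("Circle", 3.2));
        ranked.add(new ScoredGesture("Swipe", 0.4));
        System.out.println(bestMatch(ranked));
    }
}
```

Only the first entry needs to be inspected because the list is ranked; a weak top score means that no stored gesture is a convincing match.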

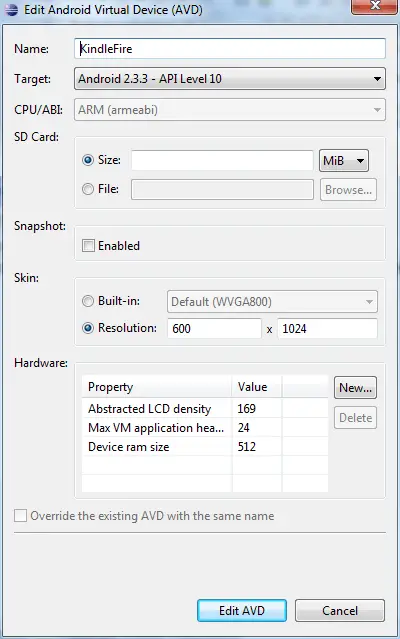

Adding SD Card Support to an AVD

Before the Gestures Builder can be used to design gestures, an AVD needs to be configured with a virtual SD Card onto which the gestures file will be saved. For the purposes of this example, the Kindle Fire AVD created in the chapter entitled Creating a Kindle Fire Android Virtual Device (AVD) will be modified to add SD Card support. Within the Eclipse environment, therefore, select the Window -> AVD Manager menu option. Within the resulting Android Virtual Device Manager screen, select an AVD (in this case the Kindle Fire device) and click on the Edit button to display the editing dialog as illustrated in Figure 17-1:

Figure 17-1

Within the configuration dialog, click on the New… button located to the right of the Hardware section and, in the resulting dialog, select SD Card Support from the Property menu before clicking on OK. On returning to the editing dialog, enter a value of 10 MiB into the Size field of the SD Card section of the dialog, then click on the Edit AVD button to commit the changes. The AVD now has virtual SD Card storage available to store the gestures file.

Creating a Gestures File

The next step is to design some gestures and save them into a gestures file on the AVD’s virtual SD Card. As previously discussed, gestures can be created using the Gestures Builder application, which is supplied as part of the AVD environment. Begin, therefore, by launching an instance of the Kindle Fire AVD modified in the previous section (this can be achieved simply by running one of the applications created in a previous chapter). Once the AVD session is running, press the Home key on your keyboard to display the home screen. On the home screen, select the grid pattern in the middle of the bottom panel (located between the phone and planet earth icons):

Figure 17-2

Once selected, the screen should switch to display all of the applications currently installed on the virtual device, one of which should be the Gestures Builder, the icon for which is illustrated in Figure 17-3:

Figure 17-3

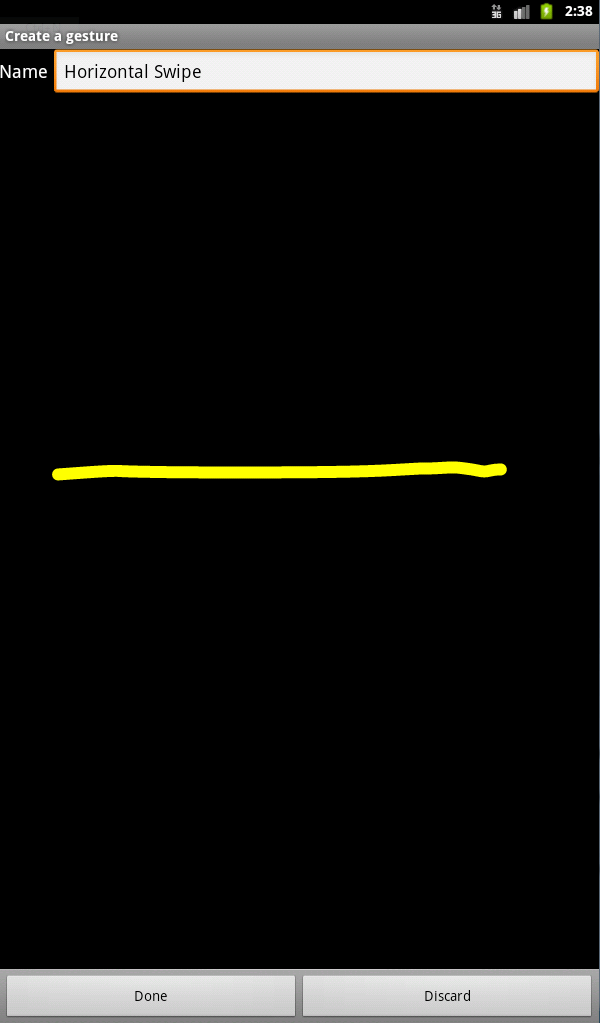

Click on the Gestures Builder icon to start the application. Once loaded, the application should indicate that no gestures have yet been created. To create a new gesture, click on the Add gesture button located at the bottom of the device screen, enter the name Horizontal Swipe into the Name text box and then click and drag the mouse pointer across the screen in a horizontal, left-to-right motion as illustrated in Figure 17-4. Assuming that the gesture appears as required (represented by the yellow line on the virtual device screen), click on the Done button to add the gesture to the gestures file:

Figure 17-4

After the gesture has been saved, the Gestures Builder will display a list of currently defined gestures which, at this point, will consist solely of the new Horizontal Swipe gesture.

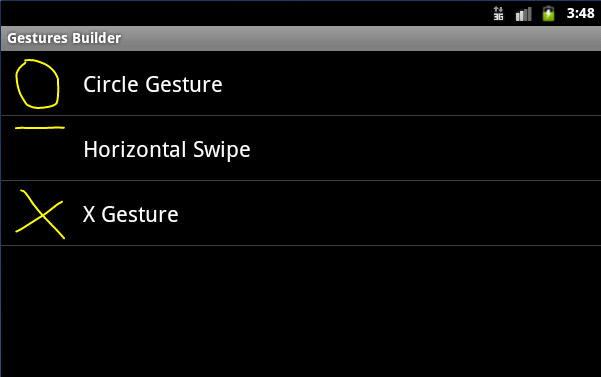

Repeat the gesture creation process to add two further gestures to the file. The second should involve a circular motion (named Circle Gesture) and the third a two-stroke gesture creating an X on the screen (named X Gesture). When creating gestures involving multiple strokes, be sure to allow as little time as possible between each stroke so that the builder knows that the strokes are part of the same gesture. Once these gestures have been added, the list within the Gestures Builder application should resemble that outlined in Figure 17-5:

Figure 17-5

Extracting the Gestures File from the SD Card

As each gesture was created within the Gestures Builder application, it was added to a file named gestures which, in turn, resides on the virtual SD Card of the AVD. Before this file can be added to an Android project, however, it must first be pulled off the SD Card and saved to the local file system. This is most easily achieved by using the adb command-line tool. Open a Terminal or Command Prompt window and execute the following command:

adb devices

In the event that the adb command is not found, refer to Setting Up a Kindle Fire Android Development Environment for guidance on adding this to the PATH environment of your system.

Once executed, the command will list all active physical devices and AVD instances attached to the system. The following output, for example, indicates that a physical device and one AVD emulator are present on the system:

List of devices attached
emulator-5554	device
74CE000600000001	device

In order to pull the gestures file from the emulator in the above example and place it into the current working directory of the Terminal or Command Prompt window, the following command would need to be executed:

adb -s emulator-5554 pull /sdcard/gestures .
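When several devices are attached, picking the right serial number by eye is error-prone. The following is a rough sketch, not part of the original tutorial, of how the output of adb devices could be parsed to find the first ready emulator and build the arguments for the pull command. The class and method names are hypothetical, and the output is assumed to consist of a header line followed by whitespace-separated serial and state columns, as in the listing above.

```java
import java.util.ArrayList;
import java.util.List;

final class AdbDevices {
    // Returns the serials of all entries whose state column is "device",
    // which is the state adb reports for a device that is ready for use.
    static List<String> readySerials(String output) {
        List<String> serials = new ArrayList<String>();
        for (String line : output.split("\\r?\\n")) {
            String[] cols = line.trim().split("\\s+");
            // The header line has more than two columns and is skipped here
            if (cols.length == 2 && cols[1].equals("device")) {
                serials.add(cols[0]);
            }
        }
        return serials;
    }

    // Returns the first ready serial that names an emulator, or null if none.
    static String firstEmulator(String output) {
        for (String serial : readySerials(output)) {
            if (serial.startsWith("emulator-")) {
                return serial;
            }
        }
        return null;
    }

    // Builds the argument list for pulling a file from the given device.
    static String[] pullCommand(String serial, String remotePath, String localPath) {
        return new String[] {"adb", "-s", serial, "pull", remotePath, localPath};
    }
}
```

The array returned by pullCommand() could be handed to java.lang.ProcessBuilder to run the command, although typing the adb command by hand is perfectly adequate for a one-off transfer.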

Once the gestures file has been created and pulled off the SD Card it is ready to be added as a project resource file. The next step, therefore, is to create a new project.

Creating the Example Project

Within the Eclipse environment, perform the usual steps to create an Android project named GestureEvent with an appropriate package name using the Android 2.3.3, level 10 SDK and including a template activity.

Within the Package Explorer panel, locate the new project, right-click (Ctrl-click on Mac OS X) on the res folder and select New -> Folder from the resulting menu. In the New Folder dialog, enter raw as the folder name and click on the Finish button. Using the appropriate file explorer for your operating system type, locate the gestures file pulled from the SD Card in the previous section and drag and drop it into the new raw folder.

When the File Operations dialog appears, make sure that the option to Copy files is selected prior to clicking on the OK button.

Designing the User Interface

This example application calls for a very simple user interface consisting of a LinearLayout view with a GestureOverlayView layered on top of it to intercept any gestures. Locate the res -> layout -> main.xml file and double click on it to load it into the main panel. Select the TextView object in the user interface layout and delete it.

Next, select the Advanced section of the Palette and drag and drop a GestureOverlayView object onto the Layout canvas and resize it to fill the entire layout area. Change the ID of the GestureOverlayView object to gOverlay. When completed, the main.xml file should read as follows:

<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent"
    android:orientation="vertical" >

    <android.gesture.GestureOverlayView
        android:id="@+id/gOverlay"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent" >
    </android.gesture.GestureOverlayView>

</LinearLayout>

Loading the Gestures File

Now that the gestures file has been added to the project, the next step is to write some code so that the file is loaded when the activity starts up. For the purposes of this project, the code to achieve this will be placed in the onCreate() method of the GestureEventActivity class located in the GestureEventActivity.java source file:

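package com.ebookfrenzy.GestureEvent;

import android.app.Activity;
import android.gesture.GestureLibraries;
import android.gesture.GestureLibrary;
import android.gesture.GestureOverlayView.OnGesturePerformedListener;
import android.os.Bundle;

// Note: this class will not compile until onGesturePerformed() is added
// in a later section of this chapter.
public class GestureEventActivity extends Activity implements OnGesturePerformedListener {

    // Holds the gestures loaded from the res/raw/gestures file
    private GestureLibrary gLibrary;

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        // Load the gestures file; close the activity if it cannot be read
        gLibrary = GestureLibraries.fromRawResource(this, R.raw.gestures);
        if (!gLibrary.load()) {
            finish();
        }
    }
}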

The above changes declare a GestureLibrary instance named gLibrary and, within the onCreate() method, load into it the contents of the gestures file located in the raw resources folder. The activity class has also been modified to implement the OnGesturePerformedListener interface, which requires the implementation of the onGesturePerformed callback method (which will be created in a later section of this chapter). Until that method has been added, the class will not compile.

Registering the Event Listener

In order for the activity to receive notification that the user has performed a gesture on the screen, it is necessary to register the OnGesturePerformedListener event listener on the gOverlay view, a reference to which can be obtained using the findViewById method as outlined in the following code:

package com.ebookfrenzy.GestureEvent;

import android.app.Activity;
import android.gesture.GestureLibraries;
import android.gesture.GestureLibrary;
import android.gesture.GestureOverlayView;
import android.gesture.GestureOverlayView.OnGesturePerformedListener;
import android.os.Bundle;

public class GestureEventActivity extends Activity implements OnGesturePerformedListener {

    private GestureLibrary gLibrary;

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        gLibrary = GestureLibraries.fromRawResource(this, R.raw.gestures);
        if (!gLibrary.load()) {
            finish();
        }

        GestureOverlayView gOverlay =
                (GestureOverlayView) findViewById(R.id.gOverlay);
        gOverlay.addOnGesturePerformedListener(this);
    }
}

Implementing the onGesturePerformed Method

All that remains before an initial test run of the application can be performed is to implement the onGesturePerformed callback method, which will be called when a gesture is performed on the GestureOverlayView instance:

package com.ebookfrenzy.GestureEvent;

import java.util.ArrayList;

import android.app.Activity;
import android.gesture.Gesture;
import android.gesture.GestureLibraries;
import android.gesture.GestureLibrary;
import android.gesture.GestureOverlayView;
import android.gesture.GestureOverlayView.OnGesturePerformedListener;
import android.gesture.Prediction;
import android.os.Bundle;
import android.widget.Toast;

public class GestureEventActivity extends Activity implements OnGesturePerformedListener {

    private GestureLibrary gLibrary;

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        gLibrary = GestureLibraries.fromRawResource(this, R.raw.gestures);
        if (!gLibrary.load()) {
            finish();
        }

        GestureOverlayView gOverlay = (GestureOverlayView) findViewById(R.id.gOverlay);
        gOverlay.addOnGesturePerformedListener(this);
    }

    public void onGesturePerformed(GestureOverlayView overlay, Gesture gesture) {
        // Compare the performed gesture with every gesture in the library;
        // the result is ranked with the best match at position 0
        ArrayList<Prediction> predictions = gLibrary.recognize(gesture);

        // Only report the best match if its score is high enough
        if (predictions.size() > 0 && predictions.get(0).score > 1.0) {
            String action = predictions.get(0).name;
            Toast.makeText(this, action, Toast.LENGTH_SHORT).show();
        }
    }
}

When a gesture on the gesture overlay view object is detected by the Android runtime, the onGesturePerformed method is called. Passed through as arguments are a reference to the GestureOverlayView object on which the gesture was detected and an object of type Gesture. The Gesture class is designed to hold the information that defines a specific gesture (essentially a sequence of timed points on the screen depicting the path of the strokes that comprise a gesture).

The Gesture object is passed through to the recognize() method of our gLibrary instance, the purpose of which is to compare the current gesture with each gesture loaded from the gestures file. Once this task is complete, the recognize() method returns an ArrayList object containing a Prediction object for each comparison performed. The list is ranked in order from the best match (at position 0) to the worst. Contained within each prediction object is the name of the corresponding gesture from the gestures file and a prediction score indicating how closely it matches the current gesture.

The code in the above method, therefore, takes the prediction at position 0 (the closest match), makes sure that it has a score greater than 1.0 and then uses a Toast message (an Android class designed to display notification pop-ups to the user) to display the name of the matching gesture.
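In a real application the name of the matching gesture would usually trigger some behavior rather than simply being displayed. One possible approach, sketched here in plain Java so that it can be tried outside of Android, is to keep a table that maps gesture names to actions. The GestureDispatcher class and its methods are hypothetical and are not part of the Android SDK.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper that maps gesture names to the code to run for them.
final class GestureDispatcher {
    private final Map<String, Runnable> actions = new HashMap<String, Runnable>();

    // Associates an action with a gesture name from the gestures file.
    void register(String gestureName, Runnable action) {
        actions.put(gestureName, action);
    }

    // Runs the action registered for the name, if any.
    // Returns false when no action has been registered for that name.
    boolean dispatch(String gestureName) {
        Runnable action = actions.get(gestureName);
        if (action == null) {
            return false;
        }
        action.run();
        return true;
    }

    public static void main(String[] args) {
        GestureDispatcher dispatcher = new GestureDispatcher();
        dispatcher.register("Horizontal Swipe", new Runnable() {
            public void run() {
                System.out.println("Turning the page");
            }
        });
        dispatcher.dispatch("Horizontal Swipe");
    }
}
```

Within onGesturePerformed, the name of the best prediction could then be passed to dispatch() in place of the Toast call.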

Testing the Application

Build and run the application either on an AVD or a physical Kindle Fire device and perform the circle and swipe gestures on the display. When performed, the toast notification should appear containing the name of the gesture that was performed. Note, however, that the X Gesture is not recognized when it is performed. Also note that when a gesture is recognized, it is outlined on the display with a bright yellow line, whilst gestures about which the overlay is uncertain appear as a faded yellow line. Whilst useful during development, this is probably not ideal for a real world application. Clearly, therefore, there is still some more configuration work to do.

Configuring the GestureOverlayView

By default, GestureOverlayView is configured to display yellow lines during gestures and to recognize only single stroke gestures. Multi-stroke gestures can be detected by setting the android:gestureStrokeType property to multiple.

Similarly, the color used to draw recognized and unrecognized gestures can be defined via the android:gestureColor and android:uncertainGestureColor properties. For example, to hide the gesture lines and recognize multi-stroke gestures, modify the main.xml file in the example project as follows:

<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent"
    android:orientation="vertical" >

    <android.gesture.GestureOverlayView
        android:id="@+id/gOverlay"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent"
        android:gestureColor="#000"
        android:uncertainGestureColor="#000"
        android:gestureStrokeType="multiple" >
    </android.gesture.GestureOverlayView>

</LinearLayout>

On re-running the application, gestures should now be invisible (since they are painted in black on a black background) and the X gesture should be recognized.

Intercepting Gestures

The GestureOverlayView is, as previously described, a transparent overlay that may be positioned over the top of other views. This leads to the question as to whether events intercepted by the gesture overlay should then be passed on to the underlying views when a gesture has been recognized. This is controlled via the android:eventsInterceptionEnabled property of the GestureOverlayView. When set to true, the gesture events are not passed to the underlying views when a gesture is recognized. This can be a particularly useful setting when gestures are being performed over a view that might be configured to scroll in response to certain gestures. Setting this property to true will avoid gestures also being interpreted as instructions to the underlying view to scroll in a particular direction.
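As a brief illustration, the property is set in the layout file like any other attribute of the overlay. The fragment below reuses the gOverlay element from this chapter's example project and would sit inside the existing LinearLayout; only the final attribute is new.

```xml
<android.gesture.GestureOverlayView
    android:id="@+id/gOverlay"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent"
    android:eventsInterceptionEnabled="true" >
</android.gesture.GestureOverlayView>
```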

Detecting Pinch Gestures

Before moving on from touch handling in general and gesture recognition in particular, the last topic of this chapter is that of handling pinch gestures. Whilst it is possible to create and detect a wide range of gestures using the steps outlined in the previous sections of this chapter, it is not possible to detect a pinching gesture (where two fingers are used in a stretching and pinching motion, typically to zoom in and out on a view or image) using the techniques discussed.

The simplest method for detecting pinch gestures is to use the Android ScaleGestureDetector class. In general terms, detecting pinch gestures involves the following steps:

1. Declaration of a new class which implements the OnScaleGestureListener interface including the required onScale(), onScaleBegin() and onScaleEnd() callback methods.

2. Creation of an instance of the ScaleGestureDetector class, passing through an instance of the class created in step 1 as an argument.

3. Implementing the onTouchEvent() callback method on the enclosing activity which, in turn, calls the onTouchEvent() method of the ScaleGestureDetector class.

In the remainder of this chapter we will create a very simple example designed to demonstrate the implementation of pinch gesture recognition.

A Pinch Gesture Example Project

Within the Eclipse environment, perform the usual steps to create an Android project named PinchExample with an appropriate package name using the Android 2.3.3, level 10 SDK and including a template activity.

Within the main.xml file, locate the TextView object and change the ID to myTextView. Locate and load the PinchExampleActivity.java file into the Eclipse editor and modify the file as follows:

package com.ebookfrenzy.PinchExample;

import android.app.Activity;
import android.os.Bundle;
import android.view.MotionEvent;
import android.view.ScaleGestureDetector;
import android.view.ScaleGestureDetector.OnScaleGestureListener;
import android.widget.TextView;

public class PinchExampleActivity extends Activity {

    private ScaleGestureDetector scaleGestureDetector;
    TextView statusText;

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        statusText = (TextView) findViewById(R.id.myTextView);
        scaleGestureDetector = new ScaleGestureDetector(this,
                new MyOnScaleGestureListener());
    }

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        // Hand every touch event to the detector so that it can track the pinch
        scaleGestureDetector.onTouchEvent(event);
        return true;
    }

    public class MyOnScaleGestureListener implements OnScaleGestureListener {

        public boolean onScale(ScaleGestureDetector detector) {
            // Ratio of the current finger span to the previous one
            float scaleFactor = detector.getScaleFactor();

            if (scaleFactor > 1) {
                // Fingers moving apart (stretching)
                statusText.setText("Zooming In");
            } else {
                // Fingers moving together (pinching)
                statusText.setText("Zooming Out");
            }
            return true;
        }

        public boolean onScaleBegin(ScaleGestureDetector detector) {
            // Must return true; returning false tells the detector to ignore
            // the rest of the gesture, so onScale() would never be called
            return true;
        }

        public void onScaleEnd(ScaleGestureDetector detector) {
            // Add code here if required
        }
    }
}

The code begins by declaring TextView and ScaleGestureDetector variables. A new class named MyOnScaleGestureListener is declared which implements the Android OnScaleGestureListener interface. This interface requires that three methods (onScale(), onScaleBegin() and onScaleEnd()) be implemented. In this instance the onScale() method identifies the scale factor and displays a message on the text view indicating the type of pinch gesture detected.

Within the onCreate() method, a reference to the text view object is obtained and assigned to the statusText variable. Next, a new ScaleGestureDetector instance is created, passing through a reference to the enclosing activity and an instance of our new MyOnScaleGestureListener class as arguments. Finally, an onTouchEvent() callback method is implemented for the activity which simply calls the corresponding onTouchEvent() method of the ScaleGestureDetector object, passing through the MotionEvent object as an argument.
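Because getScaleFactor() reports the change relative to the previous scale event rather than since the start of the gesture, code that actually resizes content normally multiplies the successive factors together and clamps the result. The following plain Java sketch illustrates the idea; ZoomTracker and its limits are hypothetical names chosen for this example and are not part of the Android SDK.

```java
// Hypothetical helper that accumulates per-event scale factors into a
// single zoom level, kept within fixed minimum and maximum limits.
final class ZoomTracker {
    private final float minZoom;
    private final float maxZoom;
    private float zoom = 1.0f;   // 1.0 means "no zoom applied yet"

    ZoomTracker(float minZoom, float maxZoom) {
        this.minZoom = minZoom;
        this.maxZoom = maxZoom;
    }

    // Applies one scale factor (as reported for a single scale event)
    // and returns the updated, clamped zoom level.
    float apply(float scaleFactor) {
        zoom = Math.max(minZoom, Math.min(maxZoom, zoom * scaleFactor));
        return zoom;
    }

    float getZoom() {
        return zoom;
    }

    public static void main(String[] args) {
        ZoomTracker tracker = new ZoomTracker(0.5f, 4.0f);
        tracker.apply(2.0f);
        System.out.println(tracker.getZoom());
    }
}
```

In the example activity, onScale() could call apply(detector.getScaleFactor()) and use the returned value to resize the view being zoomed.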

Compile and run the application on a physical Kindle Fire device and perform pinching gestures on the screen, noting that the text view displays either the zoom in or zoom out message depending on the pinching motion.

Summary

A gesture is essentially the motion of points of contact on a touch screen involving one or more strokes and can be used as a method of communication between user and application. Android allows gestures to be designed using the Gestures Builder application included with the AVD environment. Once created, gestures can be saved to a gestures file and loaded into an activity using the GestureLibrary at application runtime.

Gestures can be detected on areas of the display by overlaying existing views with instances of the transparent GestureOverlayView class and implementing an OnGesturePerformedListener event listener. Using the GestureLibrary, a ranked list of matches between a gesture performed by the user and the gestures stored in a gestures file may be generated, using a prediction score to decide whether a gesture is a close enough match.

Pinch gestures may be detected through the implementation of the ScaleGestureDetector class, an example of which was also provided in this chapter.
