An iOS 10 Speech Recognition Tutorial

Revision as of 02:24, 9 November 2016

| Previous | Table of Contents | Next |

| Playing Audio on iOS 10 using AVAudioPlayer | An iOS 10 Real-Time Speech Recognition Tutorial |

Learn SwiftUI and take your iOS Development to the Next Level |

When Apple introduced speech recognition for iOS devices, it was always assumed that this capability would one day be made available to iOS app developers. That day finally arrived with the introduction of iOS 10.

The iOS SDK now includes the Speech framework, which can be used to implement speech-to-text transcription within any iOS 10 app. Speech recognition can be implemented with relative ease using the Speech framework and, as will be demonstrated in this chapter, may be used to transcribe both real-time and previously recorded audio.

An Overview of Speech Recognition in iOS

The speech recognition feature of iOS 10 allows speech to be converted to text and includes support for a wide range of spoken languages. Most iOS users will no doubt be familiar with the microphone button that appears within the keyboard when entering text into an app. This dictation button is perhaps most commonly used to enter text into the Messages app.

Prior to the introduction of the Speech framework in iOS 10, app developers were still able to take advantage of the keyboard dictation button. Tapping a Text View object within any app displays the keyboard containing the button. Once tapped, any speech picked up by the microphone is transcribed into text and placed within the Text View. For basic requirements, this option is still available within iOS 10, though there are a number of advantages to performing a deeper level of integration using the Speech framework.

One of the key advantages offered by the Speech framework is the ability to trigger voice recognition without the need to display the keyboard and wait for the user to tap the dictation button. In addition, while the dictation button is only able to transcribe live speech, the Speech framework allows speech recognition to be performed on prerecorded audio files.

Another advantage over the built-in dictation button is that the app can specify the spoken language to be transcribed, whereas the dictation button is locked to the prevailing device-wide language setting.
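As a minimal sketch of the language selection described above, the following fragment creates one recognizer for the device locale and one for a specific locale. The "fr-FR" identifier is only an illustrative assumption; the locale-based initializer is failable and returns nil when the requested locale is not supported.

```swift
import Speech

// Recognizer for the current device locale (nil if that locale is unsupported)
let defaultRecognizer = SFSpeechRecognizer()

// Recognizer for an explicitly chosen language; "fr-FR" is an example value
let frenchRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "fr-FR"))

if frenchRecognizer == nil {
    print("Speech recognition is not supported for the requested locale")
}
```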

Behind the scenes, the service is using the same speech recognition technology as that used by Siri. It is also important to be aware that the audio is typically transferred from the local device to Apple’s remote servers where the speech recognition process is performed. The service is, therefore, only likely to be available when the device on which the app is running has an active internet connection.
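Because the service may be unavailable, for example when there is no network connection, an app can check the recognizer's isAvailable property and observe changes through SFSpeechRecognizerDelegate. The sketch below is one possible arrangement; the AvailabilityMonitor class and its onChange closure are hypothetical names introduced for illustration and are not part of the Record project.

```swift
import Speech

// Hypothetical helper that reports changes in recognizer availability
final class AvailabilityMonitor: NSObject, SFSpeechRecognizerDelegate {

    private let recognizer = SFSpeechRecognizer()

    // Called whenever the framework reports an availability change
    var onChange: ((Bool) -> Void)?

    override init() {
        super.init()
        recognizer?.delegate = self
    }

    // True only if a recognizer exists and is currently usable
    var isAvailable: Bool {
        return recognizer?.isAvailable ?? false
    }

    // SFSpeechRecognizerDelegate callback
    func speechRecognizer(_ speechRecognizer: SFSpeechRecognizer,
                          availabilityDidChange available: Bool) {
        onChange?(available)
    }
}
```

An app might use the onChange closure to enable or disable a transcription button as availability changes.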

It is important to note when working with speech recognition that the length of audio which can be transcribed in a single session is, at time of writing, restricted to one minute. Apple also imposes as yet undeclared limits on the total amount of time an app is able to make free use of the speech recognition service, the implication being that Apple will begin charging heavy users of the service at some point in the future.
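Given the session length restriction, an app may want to check the length of a recording before submitting it. The following sketch assumes the one-minute figure quoted above (the constant is an assumption, not a value exposed by the Speech framework) and takes the duration as a plain number of seconds, which could be obtained from, for example, the duration property of an AVAudioPlayer instance.

```swift
import Foundation

// Assumed limit in seconds, based on the one-minute figure quoted in the text
let assumedSessionLimit: TimeInterval = 60

// Returns true if a recording of the given length fits in a single session
func fitsSingleSession(duration: TimeInterval,
                       limit: TimeInterval = assumedSessionLimit) -> Bool {
    return duration > 0 && duration <= limit
}

print(fitsSingleSession(duration: 45))
print(fitsSingleSession(duration: 75))
```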

Speech Recognition Authorization

As outlined in the previous chapter, an app must seek permission from the user before being authorized to record audio using the microphone. This is also the case when implementing speech recognition, though the app must also specifically request permission to perform speech recognition. This is of particular importance given the fact that the audio will be transmitted to Apple for processing. In addition to an NSMicrophoneUsageDescription entry in the Info.plist file, the app must also include the NSSpeechRecognitionUsageDescription entry if speech recognition is to be performed.

The app must also specifically request speech recognition authorization via a call to the requestAuthorization method of the SFSpeechRecognizer class. This method calls a completion handler which is, in turn, passed a status value indicating whether authorization has been granted. Note that this step also includes a test to verify that the device has an internet connection.
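The current authorization state can also be read without prompting the user by calling the authorizationStatus class method. The sketch below, which uses a hypothetical function name, only triggers the request when the status has not yet been determined. Note that in this arrangement the completion closure may be called immediately or later on the main queue.

```swift
import Speech

// Hypothetical helper: reports whether speech recognition is authorized,
// prompting the user only if no decision has been made yet.
func requestSpeechAuthorizationIfNeeded(completion: @escaping (Bool) -> Void) {
    switch SFSpeechRecognizer.authorizationStatus() {
    case .authorized:
        completion(true)
    case .notDetermined:
        SFSpeechRecognizer.requestAuthorization { status in
            // The handler may run off the main queue, so hop back to it
            DispatchQueue.main.async {
                completion(status == .authorized)
            }
        }
    default:
        // Denied, restricted, or any status added in future SDKs
        completion(false)
    }
}
```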

Transcribing Recorded Audio

Once the appropriate permissions and authorizations have been obtained, speech recognition can be performed on an existing audio file with just a few lines of code. All that is required is an instance of the SFSpeechRecognizer class together with a request object in the form of an SFSpeechURLRecognitionRequest instance initialized with the URL of the audio file. A recognizer task is then created using the request object and a completion handler called when the audio has been transcribed. The following code fragment demonstrates these steps:

    import Speech

    let recognizer = SFSpeechRecognizer()
    let request = SFSpeechURLRecognitionRequest(url: fileUrl)

    recognizer?.recognitionTask(with: request, resultHandler: {
        (result, error) in
        // Print the transcription once a result is available
        if let result = result {
            print(result.bestTranscription.formattedString)
        }
    })
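The fragment above ignores errors and prints every intermediate result. As a hedged variation, the following sketch wraps the same steps in a hypothetical transcribeFile function that checks recognizer availability, reports errors and delivers only the final transcription. It assumes that authorization has already been granted.

```swift
import Speech

// Hypothetical wrapper: transcribes an audio file and passes the final
// text (or nil on failure) to the completion closure.
func transcribeFile(at fileUrl: URL, completion: @escaping (String?) -> Void) {
    guard let recognizer = SFSpeechRecognizer(), recognizer.isAvailable else {
        completion(nil)
        return
    }

    let request = SFSpeechURLRecognitionRequest(url: fileUrl)
    // Ask for a single final result instead of a stream of partial ones
    request.shouldReportPartialResults = false

    _ = recognizer.recognitionTask(with: request) { result, error in
        if let error = error {
            print("Recognition failed: \(error.localizedDescription)")
            completion(nil)
            return
        }
        if let result = result, result.isFinal {
            completion(result.bestTranscription.formattedString)
        }
    }
}
```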

Transcribing Live Audio

Live audio speech recognition makes use of the AVAudioEngine class. The AVAudioEngine class is used to manage audio nodes that tap into different input and output buses on the device. In the case of speech recognition, the engine’s input audio node is accessed and used to install a tap on the audio input bus. The audio input from the tap is then streamed to a buffer which is repeatedly appended to the speech recognition request object for conversion. These steps will be covered in greater detail in the next chapter entitled An iOS 10 Real-Time Speech Recognition Tutorial.
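The flow described above might be sketched roughly as follows. This is an outline under stated assumptions rather than the implementation used in the next chapter: the LiveTranscriber class name and the buffer size of 1024 are illustrative choices, the audio session is assumed to have been configured for recording elsewhere, and the code is written against a recent SDK in which the engine's inputNode property is non-optional.

```swift
import AVFoundation
import Speech

// Hypothetical class illustrating the tap-and-append pattern
final class LiveTranscriber {

    private let audioEngine = AVAudioEngine()
    private let recognizer = SFSpeechRecognizer()
    private var request: SFSpeechAudioBufferRecognitionRequest?
    private var task: SFSpeechRecognitionTask?

    func start(onText: @escaping (String) -> Void) throws {
        let request = SFSpeechAudioBufferRecognitionRequest()
        self.request = request

        // Install a tap on the input bus and forward each buffer to the request
        let inputNode = audioEngine.inputNode
        let format = inputNode.outputFormat(forBus: 0)
        inputNode.installTap(onBus: 0, bufferSize: 1024, format: format) { buffer, _ in
            request.append(buffer)
        }

        audioEngine.prepare()
        try audioEngine.start()

        // Deliver each updated transcription as it arrives
        task = recognizer?.recognitionTask(with: request) { result, _ in
            if let result = result {
                onText(result.bestTranscription.formattedString)
            }
        }
    }

    func stop() {
        audioEngine.stop()
        audioEngine.inputNode.removeTap(onBus: 0)
        request?.endAudio()   // Signal that no more audio will be appended
        request = nil
        task = nil
    }
}
```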

An Audio File Speech Recognition Tutorial

The remainder of this chapter will modify the Record app created in the previous chapter to provide the option to transcribe the speech recorded to the audio file. In the first instance, load Xcode, open the Record project and select the Main.storyboard file so that it loads into the Interface Builder tool.

Modifying the User Interface

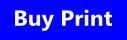

The modified Record app will require the addition of a Transcribe button and a Text View object into which the transcribed text will be placed as it is generated. Add these elements to the storyboard scene so that the layout matches that shown in Figure 98-1 below:

Figure 98-1

Select the Transcribe Button view, display the Auto Layout Align menu and apply a constraint to center the button in the horizontal center of the containing view. Display the Pin menu and establish a spacing to nearest neighbor constraint on the top edge of the view using the current value and with the Constrain to margins option disabled.

With the newly added Text View object selected, display the Attributes Inspector panel and delete the sample Latin text. Using the Pin menu, add spacing to nearest neighbor constraints on all four sides of the view with the Constrain to margins option enabled.

Display the Assistant Editor panel and establish outlet connections for the new Button and Text View named transcribeButton and textView respectively.

Complete this section of the tutorial by establishing an action connection from the Transcribe button to a method named transcribeAudio.

Adding the Speech Recognition Permission

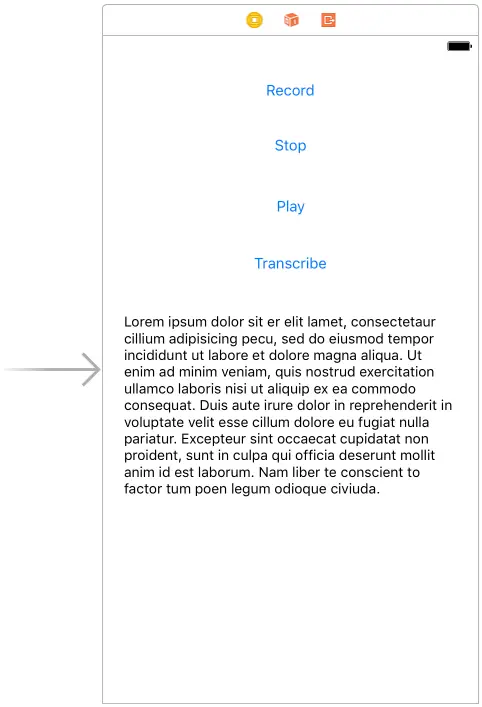

Select the Info.plist file, locate the bottom entry in the list of properties and hover the mouse pointer over the item. When the plus button appears, click on it to add a new entry to the list. From within the dropdown list of available keys, locate and select the Privacy – Speech Recognition Usage Description option as shown in Figure 98-2:

Figure 98-2

Within the value field for the property, enter a message to display to the user when requesting permission to use speech recognition. For example:

Speech recognition services are used by this app to convert speech to text.

Seeking Speech Recognition Authorization

In addition to adding the usage description key to the Info.plist file, the app must also include code to specifically seek authorization to perform speech recognition. This will also ensure that the device is suitably configured to perform the task and that the user has given permission for speech recognition to be performed. For the purposes of this example, the code to perform this task will be added as a method named authorizeSR within the ViewController.swift file as follows:

    // Requires "import Speech" at the top of ViewController.swift
    func authorizeSR() {
        SFSpeechRecognizer.requestAuthorization { authStatus in
            // Switch to the main queue before touching the user interface
            OperationQueue.main.addOperation {
                switch authStatus {
                case .authorized:
                    self.transcribeButton.isEnabled = true

                case .denied:
                    self.transcribeButton.isEnabled = false
                    self.transcribeButton.setTitle("Speech recognition access denied by user", for: .disabled)

                case .restricted:
                    self.transcribeButton.isEnabled = false
                    self.transcribeButton.setTitle("Speech recognition restricted on device", for: .disabled)

                case .notDetermined:
                    self.transcribeButton.isEnabled = false
                    self.transcribeButton.setTitle("Speech recognition not authorized", for: .disabled)
                }
            }
        }
    }

Note that the switch statement code is specifically performed on the main queue. This is because the completion handler can potentially be called at any time and not necessarily within the main thread queue. Since the completion handler code in the statement makes changes to the user interface, these changes must be made on the main queue to avoid unpredictable results. With the authorizeSR method implemented, modify the end of the viewDidLoad method to call this method:

    override func viewDidLoad() {
        super.viewDidLoad()
        playButton.isEnabled = false
    .
    .
    .
        } catch let error as NSError {
            print("audioSession error: \(error.localizedDescription)")
        }

        authorizeSR()
    }
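The main queue requirement discussed for the authorizeSR method can also be met using Grand Central Dispatch instead of OperationQueue. The following is a small sketch of that alternative; the runOnMain helper is a hypothetical name, and it runs the work immediately when already on the main thread.

```swift
import Foundation

// Hypothetical helper: runs the closure on the main thread, dispatching
// asynchronously only when called from a background thread.
func runOnMain(_ work: @escaping () -> Void) {
    if Thread.isMainThread {
        work()
    } else {
        DispatchQueue.main.async(execute: work)
    }
}

// Example: a user interface update would go inside the closure
var updated = false
runOnMain {
    updated = true
}
```

Within a completion handler such as the one passed to requestAuthorization, the switch statement would be placed inside the closure passed to runOnMain.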

Performing the Transcription

All that remains before testing the app is to implement the code within the transcribeAudio action method. Locate the template method in the ViewController.swift file and modify it to read as follows:

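    @IBAction func transcribeAudio(_ sender: AnyObject) {
        // Do nothing if there is no recording to transcribe
        guard let url = audioRecorder?.url else { return }

        let recognizer = SFSpeechRecognizer()
        let request = SFSpeechURLRecognitionRequest(url: url)

        recognizer?.recognitionTask(with: request, resultHandler: {
            (result, error) in
            // Display the latest transcription each time a result arrives
            if let result = result {
                self.textView.text = result.bestTranscription.formattedString
            }
        })
    }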

Testing the App

Compile and run the app on a physical device, accept the request for speech recognition access, tap the Record button and record some speech. Tap the Stop button, followed by the Transcribe button, and watch as the recorded speech is transcribed into text within the Text View object.

Summary

The Speech framework provides apps with access to the same speech recognition technology used by Siri. This allows speech to be transcribed to text, either in real-time or by passing pre-recorded audio to the recognition system. This chapter has provided an overview of speech recognition within iOS 10 and adapted the Record app created in the previous chapter to transcribe recorded speech to text. The next chapter, entitled An iOS 10 Real-Time Speech Recognition Tutorial, will provide a tutorial on performing speech recognition in real-time.
